DebateLab@KIT computational philosophy projects

Natural-Language Multi-Agent Simulations of Argumentative Opinion Dynamics

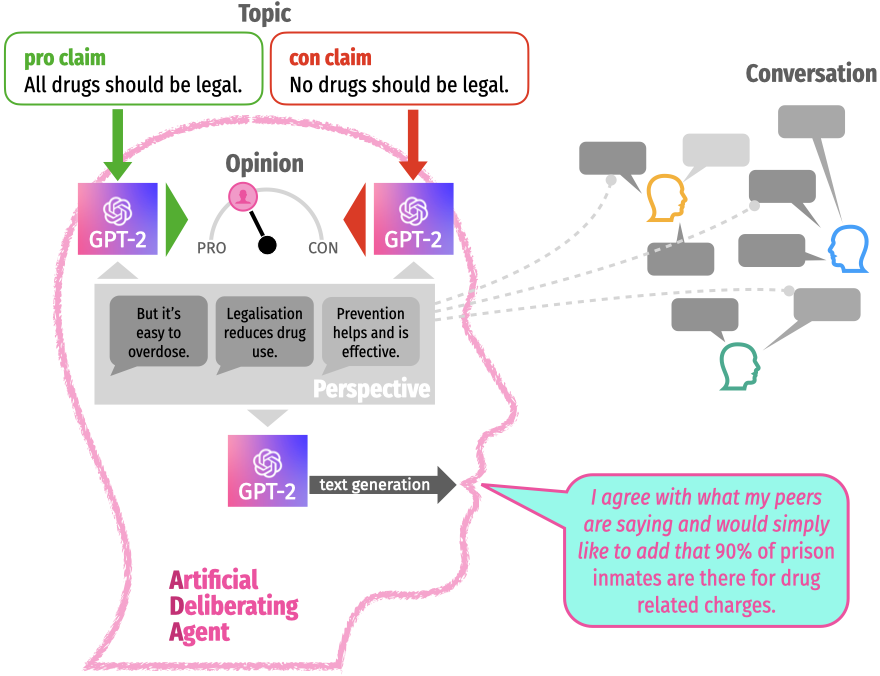

In our recent paper, we develop a natural-language agent-based model of argumentation (ABMA). Its artificial deliberative agents (ADAs) are constructed with the help of neural language models recently developed in AI and computational linguistics (which we’ve explored here and here). ADAs are equipped with a minimalist belief system and may generate and submit novel contributions to a conversation.

The natural-language ABMA allows us to simulate collective deliberation in English, i.e. with arguments, reasons, and claims themselves – rather than with their mathematical representations (as in formal models).

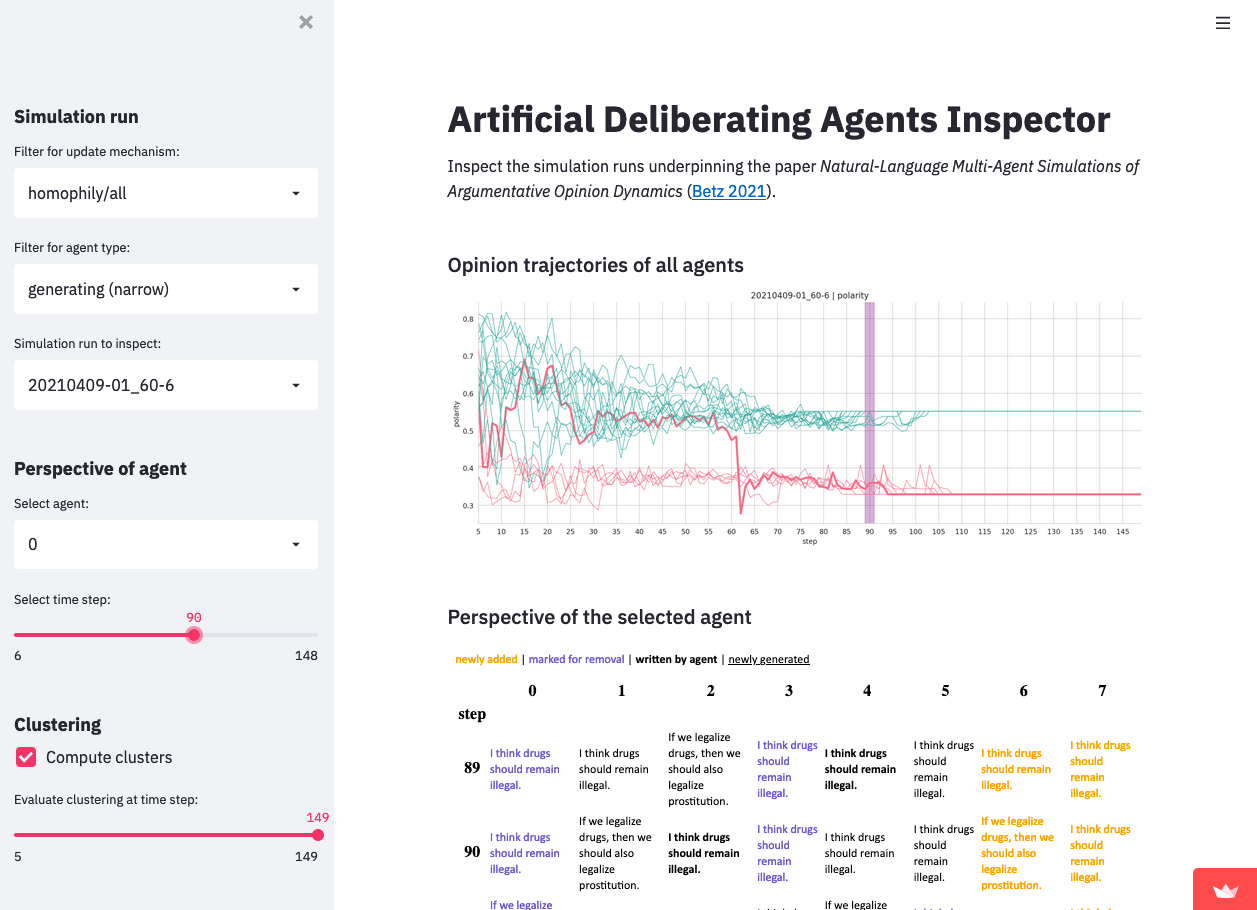

We use the natural-language ABMA to test the robustness of formal reason-balancing models of argumentation [Maes & Flache 2013, Singer et al. 2019]. First, as long as ADAs remain passive, confirmation bias and homophily updating trigger polarization, which is consistent with results from formal models. However, once ADAs start to actively generate new contributions, the evolution of a conversation is dominated by properties of the agents as authors. This suggests that the creation of new arguments, reasons, and claims critically affects a conversation and is of pivotal importance for understanding the dynamics of collective deliberation.
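To make the passive case more concrete, here is a minimal toy sketch of a formal reason-balancing setup with confirmation bias. It is not the model from the paper: posts are reduced to a valence of +1 (pro) or -1 (con), an agent's opinion is the mean valence of the posts in its perspective, and all names and parameters (`opinion`, `biased_update`, `simulate`, `bias`, `perspective_size`) are assumptions made for illustration. Homophily is left out to keep the sketch short.

```python
import random


def opinion(perspective):
    """Opinion = mean valence of the posts an agent currently holds (in [-1, 1])."""
    return sum(perspective) / len(perspective)


def biased_update(perspective, conversation, rng, bias):
    """Passive agent: draw an existing post and maybe swap it into the perspective.

    A post that agrees with the current opinion (or meets a neutral opinion)
    is always adopted. A disagreeing post is adopted with probability 1 - bias
    (hypothetical confirmation-bias rule, assumed for this sketch).
    """
    candidate = rng.choice(conversation)
    agrees = candidate * opinion(perspective) >= 0
    if agrees or rng.random() >= bias:
        perspective[rng.randrange(len(perspective))] = candidate


def simulate(n_agents=20, n_posts=50, perspective_size=5,
             steps=200, bias=0.9, seed=0):
    """Run the toy model and return the final opinion of every agent."""
    rng = random.Random(seed)
    # The conversation is fixed: passive agents never add new posts.
    conversation = [rng.choice([-1, 1]) for _ in range(n_posts)]
    agents = [
        [rng.choice(conversation) for _ in range(perspective_size)]
        for _ in range(n_agents)
    ]
    for _ in range(steps):
        for perspective in agents:
            biased_update(perspective, conversation, rng, bias)
    return [opinion(p) for p in agents]


if __name__ == "__main__":
    print(sorted(simulate()))
```

Comparing runs with a high and a low `bias` gives a feel for how selective uptake of existing posts alone can shape the opinion distribution. The natural-language ABMA replaces the numeric valences with actual text, and lets agents write new posts.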

You can explore the simulation runs with our Streamlit app.

The paper has been published in JASSS; the preprint is still available here.

Written on April 15th, 2021 by Gregor Betz