DebateLab@KIT computational philosophy projects

Deep Argument Analysis with Transformers

Transformers are dramatically pushing the boundaries of machines’ natural language reasoning abilities. We’ve asked: Can we use current pre-trained language models for reconstructing arguments? Argument reconstruction is a central critical thinking skill (see for example Joe Lau’s Critical Thinking Web). At the same time, it is, for various reasons, a complex and demanding task (as illustrated by examples here and here).

Our novel idea is to break down the argument reconstruction process into smaller, well-defined text-to-text sub-tasks, which can be carried out step by step.

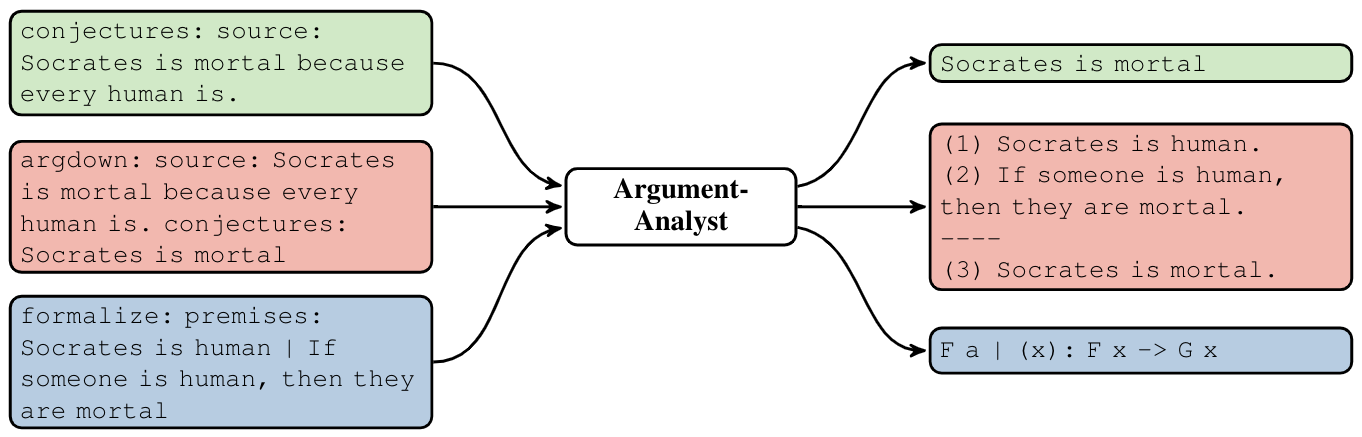

The following figure illustrates some such tasks:

By chaining the sub-tasks in one way or another, we may emulate alternative reconstruction strategies. For example:

Straight reconstruction:

- Given the source text, reconstruct the argument (argdown snippet).

- Given the source text, identify the premise statement(s).

- Given the source text, identify the conclusion statement(s).

Hermeneutic cycle:

- Given the source text, reconstruct the argument (argdown snippet).

- Given the source text and the reconstructed argument (from step 1), identify the premise statement(s).

- Given the source text and the reconstructed argument (from step 1), identify the conclusion statement(s).

Then, throw away the source text and:

- Given the identified premise statements (from step 2) and the conclusion statements (from step 3), re-reconstruct the argument (argdown snippet).
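The two strategies above can be written down as chains of sub-tasks, where each step names the inputs it may see and the output it produces. The following is a minimal sketch, not the project's actual code: the key names (`source_text`, `argdown`, `premises`, `conclusion`), the `run_chain` helper, and the `echo_model` stand-in are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

# One step = (inputs the model may see, output it must produce).
# All key names are hypothetical labels chosen for this sketch.
Step = Tuple[Tuple[str, ...], str]

STRAIGHT: List[Step] = [
    (("source_text",), "argdown"),
    (("source_text",), "premises"),
    (("source_text",), "conclusion"),
]

HERMENEUTIC_CYCLE: List[Step] = [
    (("source_text",), "argdown"),
    (("source_text", "argdown"), "premises"),
    (("source_text", "argdown"), "conclusion"),
    # The source text is thrown away: only the extracted statements are used.
    (("premises", "conclusion"), "argdown"),
]

# A model takes the visible inputs and the name of the target output.
Model = Callable[[Dict[str, str], str], str]


def run_chain(chain: List[Step], source_text: str, model: Model) -> Dict[str, str]:
    """Run the sub-tasks in order, feeding earlier outputs into later steps."""
    state = {"source_text": source_text}
    for inputs, output in chain:
        given = {key: state[key] for key in inputs}
        state[output] = model(given, output)  # later steps overwrite earlier results
    return state


def echo_model(given: Dict[str, str], target: str) -> str:
    """Stand-in for a text-to-text model; it only records what it was shown."""
    return f"<{target} from {'+'.join(sorted(given))}>"


if __name__ == "__main__":
    result = run_chain(HERMENEUTIC_CYCLE, "Some argumentative text.", echo_model)
    print(result["argdown"])
```

Because the stand-in model only reports which inputs it received, running the sketch makes the difference between the strategies visible: in the hermeneutic cycle, the final argdown snippet is produced from the premises and conclusion alone, without the source text.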

Now, enter NLP. We create a synthetic dataset, AAAC (browse here), that contains comprehensive logical analyses of informal argumentative texts, as well as tailor-made metrics for assessing the exegetic and systematic quality of a reconstruction. We then fine-tune a T5 language model on this dataset, effectively training the model on all the previously defined sub-tasks of argument analysis. Consequently, the trained model, ArgumentAnalyst, can emulate different reconstruction strategies, too. We can virtually program the model to tackle an argumentative text in a specific way.
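Since every sub-task is text-to-text, "programming" the model amounts to deciding which pieces of text go into the input string and which output is requested. The sketch below shows one way such an input could be serialized. The `build_prompt` function and the `target: key: text` layout are assumptions for illustration only; they are not the input format actually used by ArgumentAnalyst or the AAAC dataset.

```python
from typing import Dict


def build_prompt(target: str, given: Dict[str, str]) -> str:
    """Serialize one sub-task as a single input string (hypothetical layout).

    The requested output comes first, followed by each available input,
    with internal whitespace collapsed so the prompt stays on one line.
    """
    parts = [f"{key}: {' '.join(text.split())}" for key, text in given.items()]
    return f"{target}: " + " ".join(parts)


if __name__ == "__main__":
    # Step 2 of the hermeneutic cycle: source text plus a prior reconstruction.
    prompt = build_prompt(
        "premises",
        {
            "source_text": "Socrates is a man.\nSo he is mortal.",
            "argdown": "(1) Socrates is a man. ---- (2) Socrates is mortal.",
        },
    )
    print(prompt)
```

Swapping the requested target or the set of supplied inputs yields a different sub-task for the same model, which is what makes the chained strategies possible.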

Our most important finding is that our modular framework helps to improve the performance of ArgumentAnalyst. In particular, advanced reconstruction strategies like the hermeneutic cycle clearly outperform naive strategies. Moreover, the modular framework allows us to explore the extent of underdetermination in a specific problem (there is typically more than one legitimate interpretation of an argumentative text) and, furthermore, provides informative second-order evidence for characterizing a given text (failure to come up with a coherent, comprehensive reconstruction suggests that the original argument is flawed).

We’re clearly far from having solved artificial argument reconstruction. But we submit that this is a step in the right direction.

Written on December 6th, 2021 by Gregor Betz